One of the big problems of Deep Learning is its “deep” part: you need to present thousands of data points to get good learning.

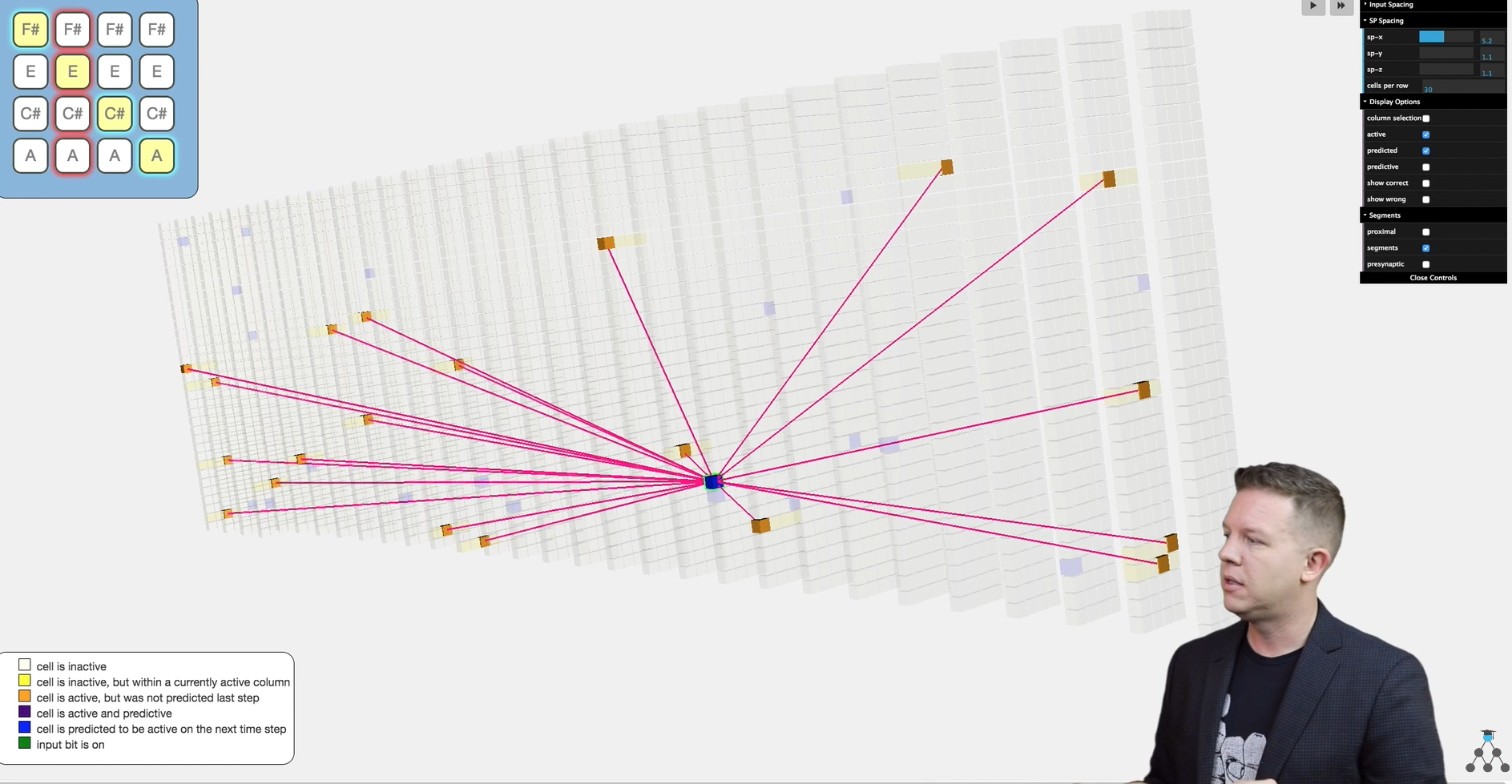

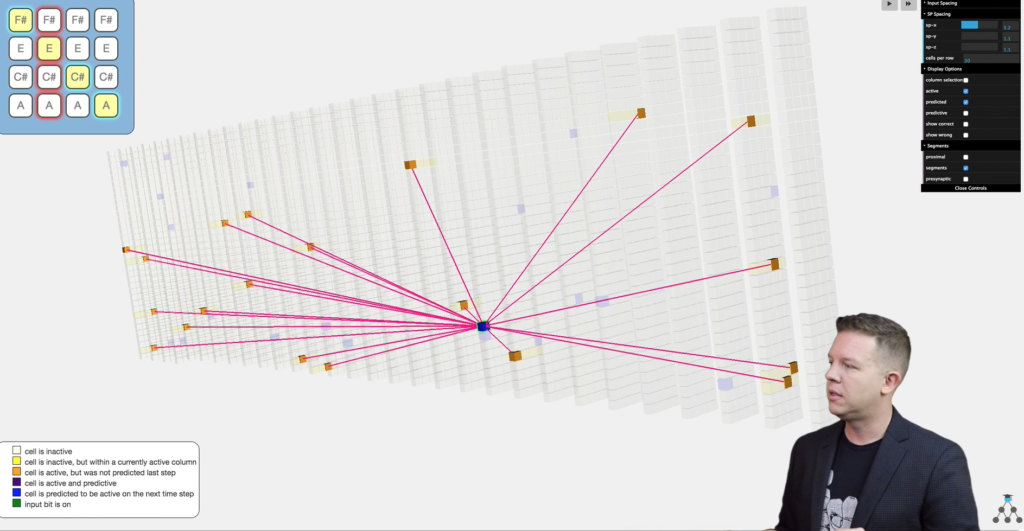

Hierarchical Temporal Memory (HTM) is a technique that models the neocortex in a much more realistic way than deep learning does. While many people have made fun of this technique, I was totally unaware of it. Siraj (an excellent YouTuber, https://www.youtube.com/channel/UCWN3xxRkmTPmbKwht9FuE5A ) talked about it recently, and I immediately became obsessed with implementing a tiny chatbot with it… I swallowed all the training videos in a matter of hours (2x speed helps), then got to the keyboard and spent a full day (about 10 hours) coding the first system with NuPic ( https://github.com/numenta/nupic )… Natural language is fairly easy to debug, which is why I picked a chatbot as the example.

- Learning: I cannot find an easy way to run NuPic inference on CoreML… ouch! The CoreML API will have to be extended, and more basic operations will have to be added to the neural network hardware, including the A12. (Expected: when you are first to market with machine learning chips, you often overlook some of the possibilities of the system.)

- Looking at the first baby code I wrote with HTM, it learned quickly, a lot faster than Deep Learning… Inference is blazing fast too. It does not yet match the Deep Learning chatbot in terms of answer quality, and there are some seriously wrong answers mixed in, but it now runs on iOS and x86. I have some other goals to complete before I come back to it, but this is astonishingly promising: learning takes a matter of minutes instead of days…
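To give a feel for why this style of learning can be so fast, here is a toy sketch in Python. It is NOT NuPic's API and NOT a real HTM temporal memory (there are no columns, cells, or distal segments). It only borrows two HTM ideas: words become sparse distributed representations (SDRs), and learning is a single online pass that strengthens observed transitions, with no epochs and no gradient descent. The hash-based `encode` function, the `ToySequenceMemory` class, and the `SDR_SIZE`/`ACTIVE_BITS` values are all hypothetical choices made for illustration.

```python
import hashlib
from collections import Counter, defaultdict

SDR_SIZE = 2048   # bits per representation (assumed value, for illustration)
ACTIVE_BITS = 40  # about 2% of bits active, mimicking HTM-style sparsity (assumed value)


def encode(word):
    """Hypothetical encoder: map a word to a fixed, sparse set of active bit indices."""
    bits = set()
    salt = 0
    while len(bits) < ACTIVE_BITS:
        digest = hashlib.sha256(f"{word}:{salt}".encode()).digest()
        bits.add(int.from_bytes(digest[:4], "big") % SDR_SIZE)
        salt += 1
    return frozenset(bits)


class ToySequenceMemory:
    """First-order next-word memory over sparse codes (a toy, not NuPic's TemporalMemory)."""

    def __init__(self):
        # transitions[bit][next_bit] counts how often next_bit was active right after bit
        self.transitions = defaultdict(Counter)
        self.vocab = {}

    def learn(self, sentence):
        """Single online pass: no epochs, no gradients, just strengthen observed transitions."""
        words = sentence.lower().split()
        for word in words:
            self.vocab[word] = encode(word)
        for prev, nxt in zip(words, words[1:]):
            for bit in self.vocab[prev]:
                self.transitions[bit].update(self.vocab[nxt])

    def predict(self, word):
        """Return the known word whose code best matches the bits predicted after `word`."""
        votes = Counter()
        for bit in encode(word.lower()):
            votes.update(self.transitions.get(bit, {}))
        if not votes:
            return None
        # Score each known word by how many predicted-bit votes its SDR collects
        return max(self.vocab, key=lambda w: sum(votes[b] for b in self.vocab[w]))


memory = ToySequenceMemory()
memory.learn("hello how are you")    # each sentence is seen exactly once
memory.learn("i am fine thank you")
print(memory.predict("how"))  # are
print(memory.predict("am"))   # fine
```

Because every word activates only a small fraction of the bits, two unrelated words rarely share more than a bit or two, so a single exposure is enough for the right successor to win by a wide margin. A real HTM adds cells per column so the same word can be predicted differently depending on its earlier context, which this first-order toy cannot do.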

Sooner or later, the efficiency of Hierarchical Temporal Memory (HTM) will allow it to take over from Deep Learning; it is only a matter of time.

Next Steps:

- Match Deep Learning in terms of prediction quality

- Try to use NuPic to match DeepSpeech2 prediction stats.