That is the math of the effort To get the most extensive conversation database on social media …

let’s hope it does work as I am hoping at the end, I ll May look like this if it does not come together nicely:

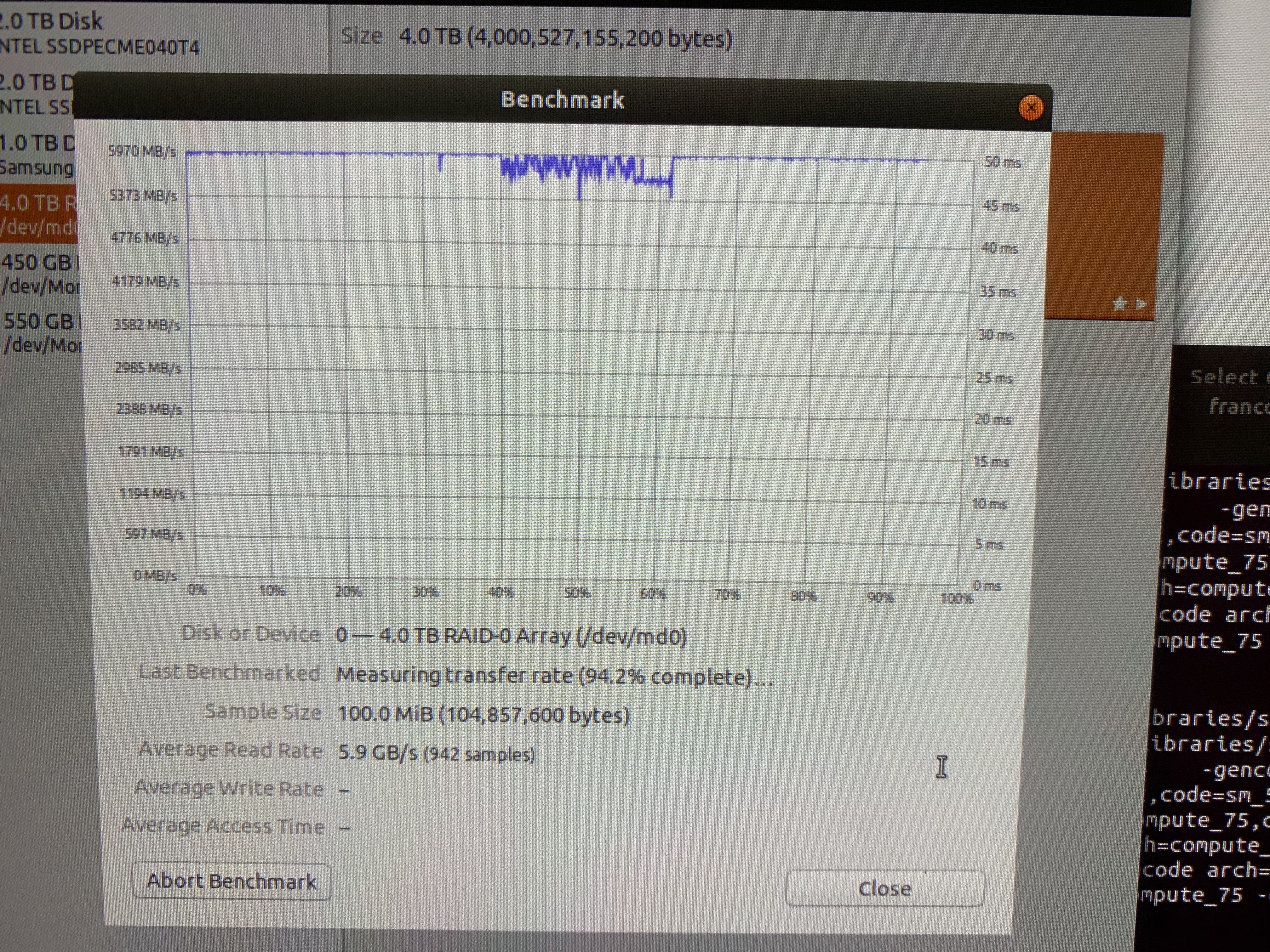

The goal is to use the inference of voice intonation using FFT, then, use them to understand the intent of the user by extracting the feeling, while having learned from this user for hours in the back ground. That input is used as one of the input of the deepchatbot. In theory, that should be able to catch derision and few other things that trend to break the realism of chat bots. I have spend my day trying to by pass the output of the 1st sentiment inference and connect the second to last layer to the Chatbot 2nd level layer, this is rock n roll on the CPU and I had to add a super fast SSD for the testing … this stuff consumer SSD bandwidth … that should do:

Voices + text and stored FFTs … recipe for warming up the house 😉