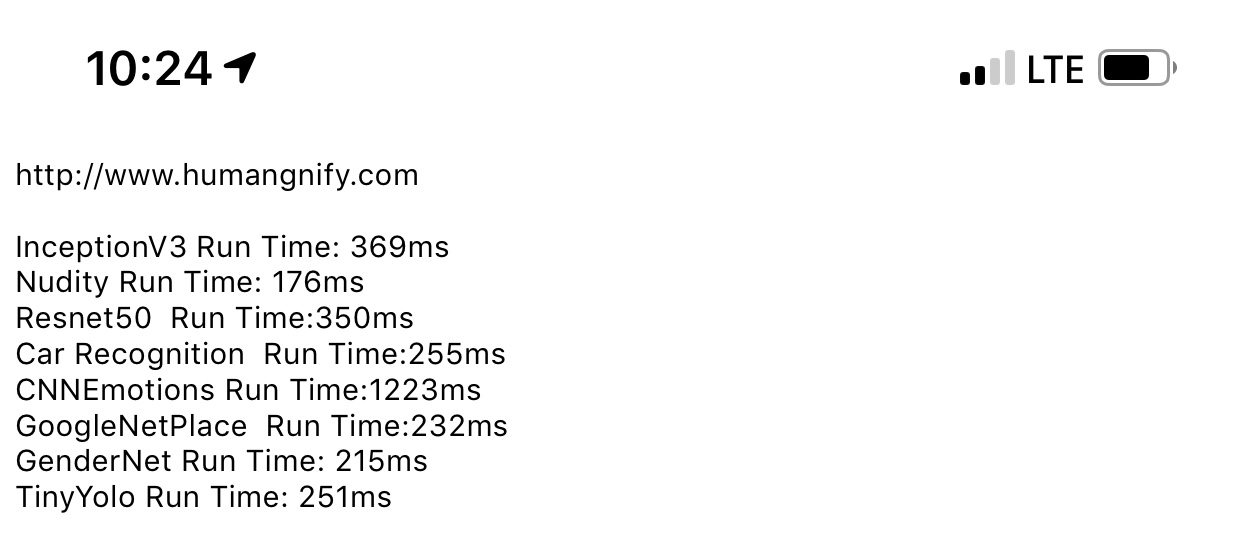

The speed of my own version of Tiny Yolo is remarkable … it is beating a Xeon skylake easily in term of instantaneous delivery latency. I think I still can get it down 10% more, but the iPhoneXsMax is doing good with its CoreML engine.

I noticed a little drop once again of the confidence of the prediction compare to the version running on the GPU on the iPhone 7 and co.

Latency of the 1st access is high (600ms to 900ms), but after the model is loaded into the CoreML engine, the latency is very consistent at around 200msish.

Automatic Quantization of apple tools is pretty cool, I did not find a neuronal net, except deepspeech2 that stop converging on a proper prediction, I still have to find the tf.nn operation that causes this.