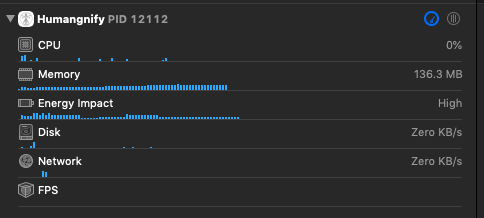

So, here is the memory profile of 6 inferences run on iOS 12 on the iPhone XS Max.

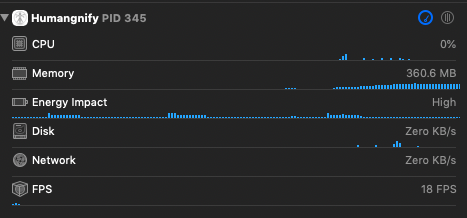

Here is the memory profile of 6 inferences run on the iPhone X.

The iPhone XS Max seems to be storing the inference models somewhere that is NOT main memory. Everything is functional, but the amount of memory used is a LOT smaller.

It could be due to 2 things:

- The iPhone XS Max uses less precision when storing the neural net.

- The hardware Neural Engine stores the model inside itself.
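To get a feel for the first hypothesis, here is a minimal back-of-the-envelope sketch. The helper `weightBytes` and the parameter count are made up for illustration and are not taken from the models I profiled. It only shows that storing weights in 16 bits instead of 32 bits halves the weight storage.

```swift
// Hypothetical helper: approximate storage needed for a model's weights.
func weightBytes(parameterCount: Int, bytesPerWeight: Int) -> Int {
    return parameterCount * bytesPerWeight
}

// Made-up parameter count, for illustration only.
let parameterCount = 5_000_000

let float32Bytes = weightBytes(parameterCount: parameterCount, bytesPerWeight: 4) // 32-bit weights
let float16Bytes = weightBytes(parameterCount: parameterCount, bytesPerWeight: 2) // 16-bit weights

// Ratio of 16-bit storage to 32-bit storage.
let ratio = Double(float16Bytes) / Double(float32Bytes)
print(ratio) // prints 0.5
```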

This is very interesting, because I have allocated 26 different inferences and the increase in memory usage is minimal, while on the iPhone X it was growing almost linearly with the size of the models stored. This is very good news: we will be able to pack a large number of machine learning inferences into an app without exploding its memory size, giving the app a chance to stay in memory and process some ML inference in the background. You just need to make sure it is not too heavy. Just verified: we can run those inferences on data fetched in a background task.
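If you want to reproduce this kind of measurement in code rather than in the profiler, here is a rough sketch. It is not the exact procedure I used: the function names `physFootprintBytes` and `footprintWhileLoading` are hypothetical, and it assumes you already have URLs to compiled Core ML models. It reads the process footprint from the Mach `task_info` call after each model load.

```swift
import Foundation
import CoreML

/// Physical memory footprint of the current process in bytes,
/// or nil if the kernel call fails.
func physFootprintBytes() -> UInt64? {
    var info = task_vm_info_data_t()
    var count = mach_msg_type_number_t(
        MemoryLayout<task_vm_info_data_t>.size / MemoryLayout<integer_t>.size)
    let result = withUnsafeMutablePointer(to: &info) { infoPtr in
        infoPtr.withMemoryRebound(to: integer_t.self, capacity: Int(count)) { intPtr in
            task_info(mach_task_self_, task_flavor_t(TASK_VM_INFO), intPtr, &count)
        }
    }
    return result == KERN_SUCCESS ? info.phys_footprint : nil
}

/// Hypothetical helper: loads each compiled model in turn and records
/// the process footprint after every load (0 if the reading failed).
func footprintWhileLoading(modelURLs: [URL],
                           computeUnits: MLComputeUnits) throws -> [UInt64] {
    let config = MLModelConfiguration()
    config.computeUnits = computeUnits

    var loaded: [MLModel] = []   // keep every model alive while sampling
    var samples: [UInt64] = []
    for url in modelURLs {
        loaded.append(try MLModel(contentsOf: url, configuration: config))
        samples.append(physFootprintBytes() ?? 0)
    }
    withExtendedLifetime(loaded) {}
    return samples
}
```

Running it once with `.cpuOnly` and once with `.all` on the same device might hint at whether the savings depend on the compute units used, but I would treat that as a clue, not a proof, since this counter may not reflect memory held by the hardware itself.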

The iPhone XS family used about half of the memory required by other iOS devices, which is remarkable.

Francois Piednoel.