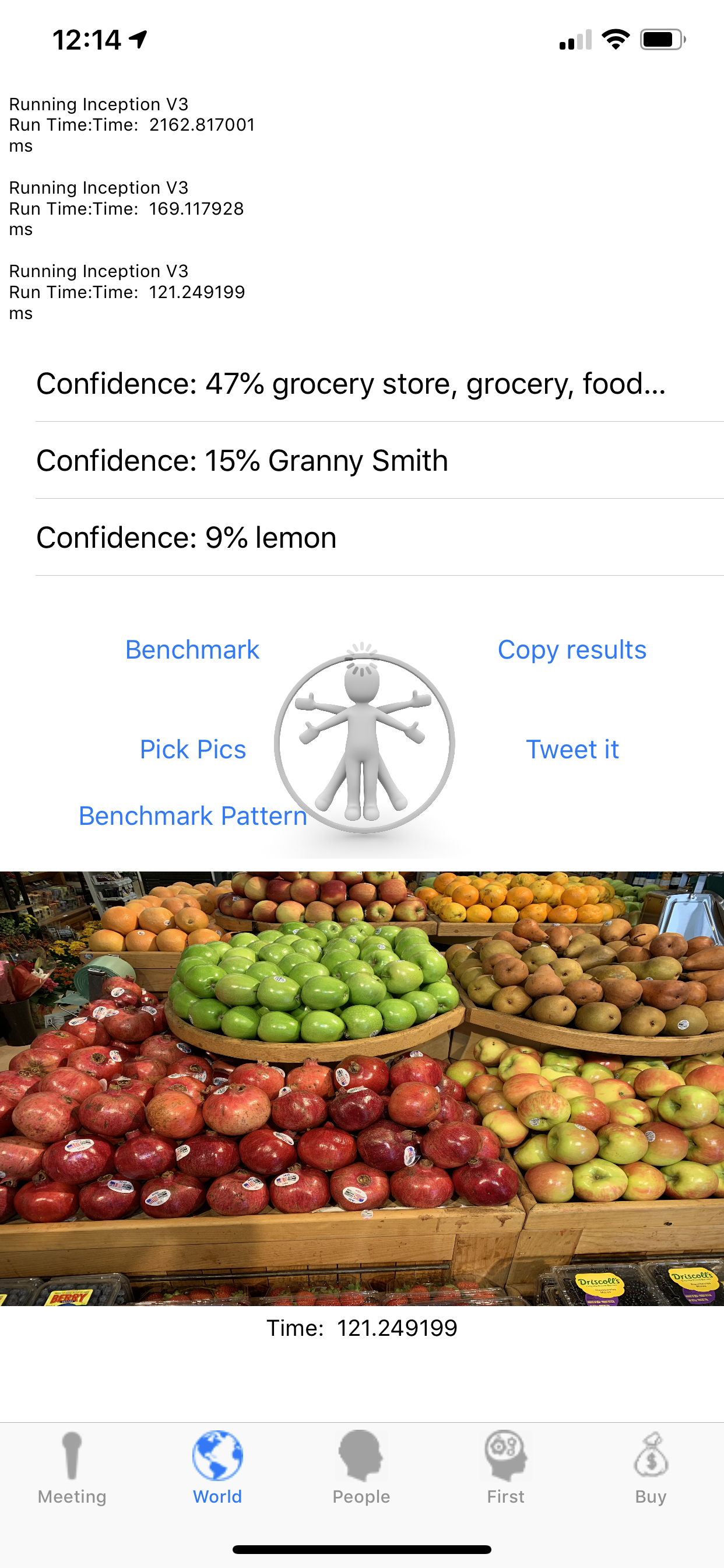

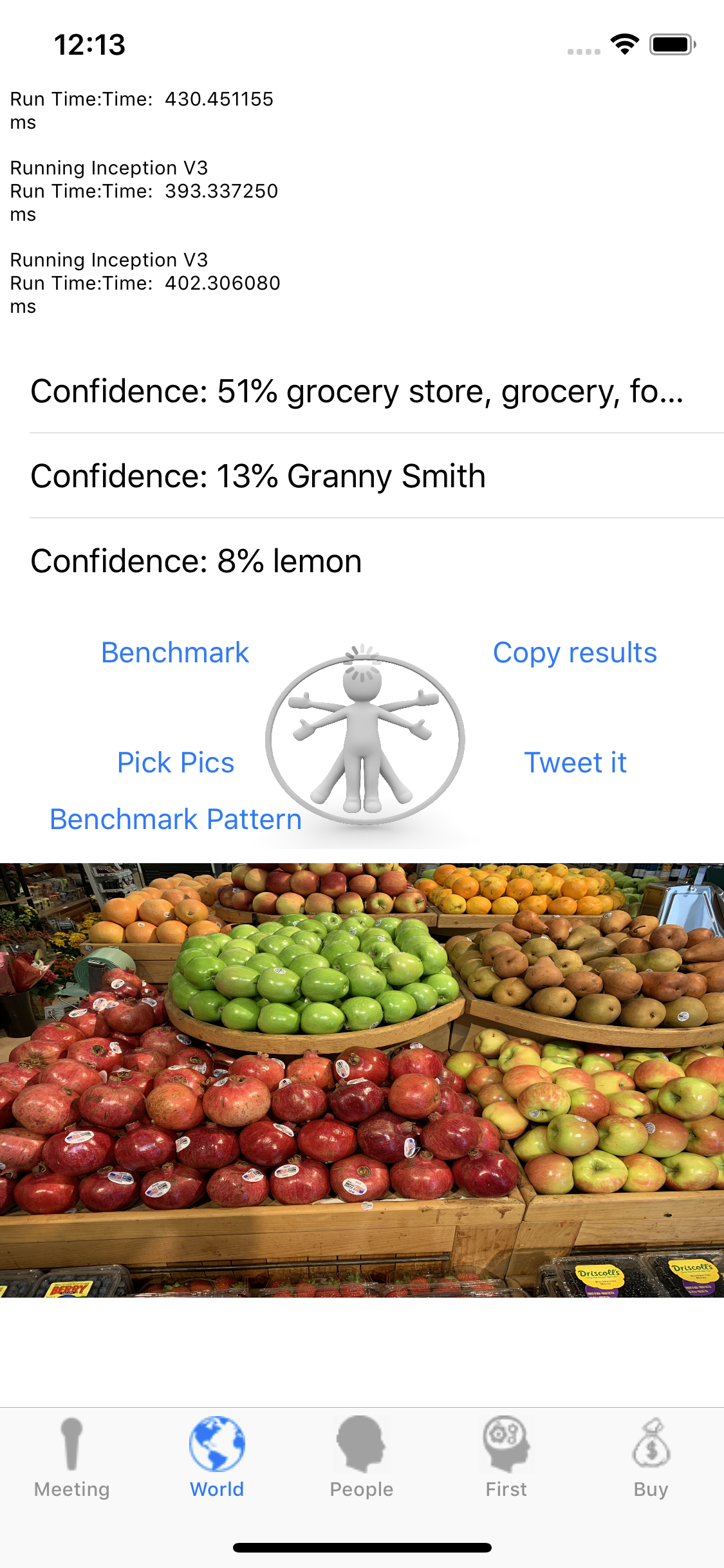

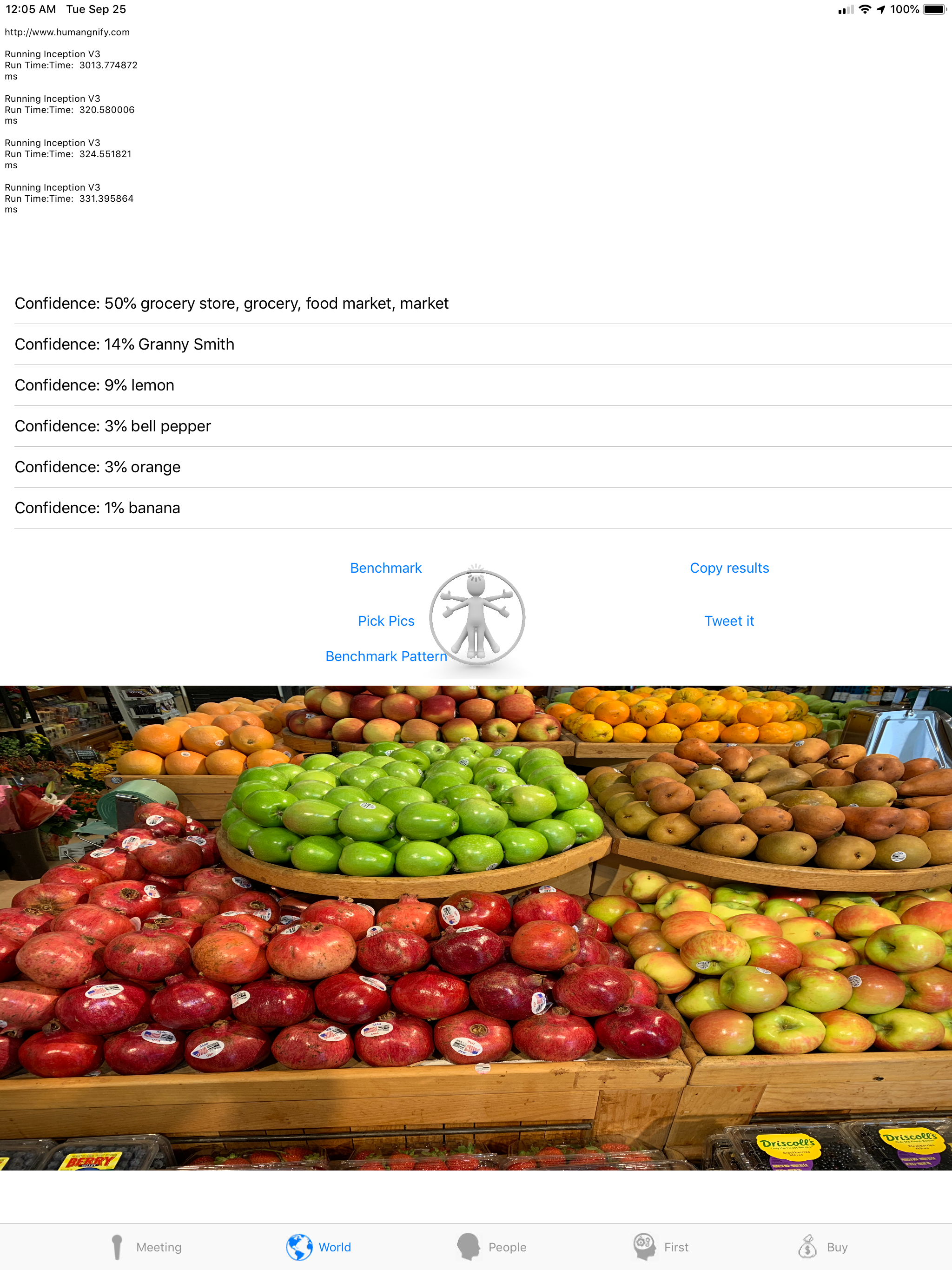

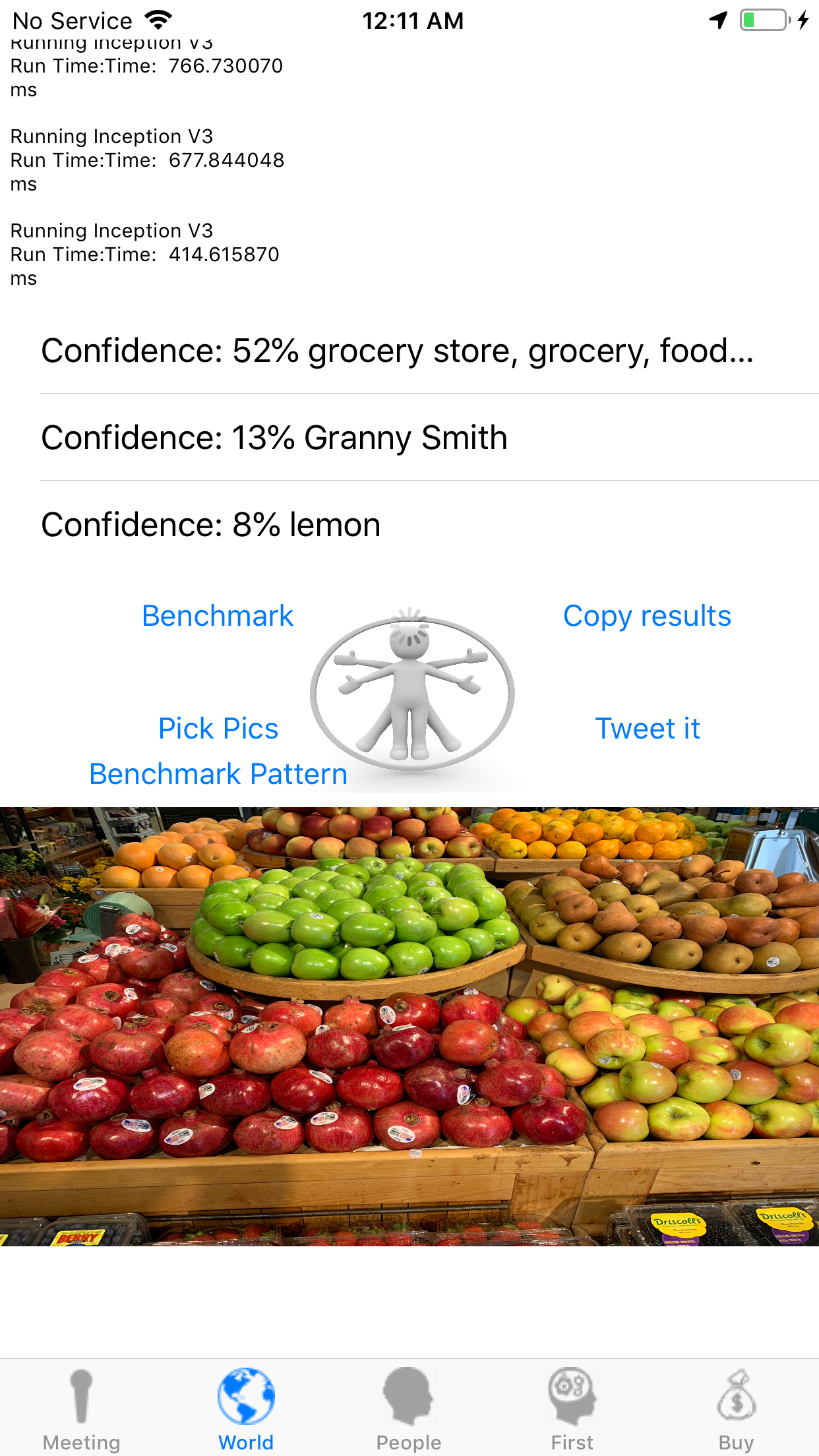

So, one thing still bothers me: the deep neural networks don't give exactly the same results across the different hardware accelerators, so I need to investigate the differences. The outputs keep the same confidence ordering and the same suggested labels, so it is probably a rounding issue; I need to reverse-engineer the hardware a little bit more. But here it is… Keep in mind that warm-up runs were done before these measurements. The iPhone Xs Max seems to be pretty slow at turning on hardware acceleration. It is obviously power gated, and since it is a large piece of silicon, the voltage has to be raised carefully. (I am speculating here, but I expect Apple to fix this with better libraries that power on the cluster early when Core ML is being initialized.)
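One way to check the "it's just rounding" hypothesis is to compare the confidence vectors from two compute paths: the class ranking should match exactly, while the absolute differences should stay within a small tolerance. The sketch below is a minimal, hardware-agnostic illustration; it assumes the accelerator computes in IEEE 754 half precision (simulated here with Python's `struct` `"e"` format), and the helper names `ranking` and `compare_backends`, the tolerance, and the sample scores are all hypothetical, not measured data.

```python
import struct


def to_half(x: float) -> float:
    """Round a float to IEEE 754 half precision and back (simulates fp16 compute)."""
    return struct.unpack("e", struct.pack("e", x))[0]


def ranking(scores):
    """Class indices sorted by descending confidence."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def compare_backends(ref, other, tol=1e-2):
    """Return (same_order, max_abs_diff, within_tol) for two confidence vectors."""
    same_order = ranking(ref) == ranking(other)
    max_diff = max(abs(a - b) for a, b in zip(ref, other))
    return same_order, max_diff, max_diff <= tol


# Hypothetical example: fp32 "CPU" scores vs. the same scores rounded to fp16.
cpu_scores = [0.712345, 0.201234, 0.086421]
npu_scores = [to_half(x) for x in cpu_scores]
same_order, max_diff, ok = compare_backends(cpu_scores, npu_scores)
print(same_order, max_diff, ok)
```

If the ordering matches but the values differ slightly, rounding in a lower-precision path is a plausible explanation; if the ordering itself changes, something beyond rounding is going on.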

From left to right: iPhone Xs Max, iPhone X, iPad Pro (1st generation), iPhone 6s Plus.

Apple did not lie to you: on Inception V3 you see a nice boost over the iPhone X; here, it is almost 4x… You can also see that warming up on the CPU and GPU is different: the hardware ramps up more slowly, and it takes more warm-up runs to get the CPU and GPU going on systems not equipped with an NPU.
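To put a number on "it takes more warm-up", one can record the latency of each consecutive inference and find the first iteration after which latency stays close to its steady-state value. The sketch below is one simple way to do that, not the method used for the charts above: it assumes steady state is the median of the second half of the run and that "settled" means within a relative tolerance (10% here). The function name `warmup_iterations` and the latency values are hypothetical.

```python
import statistics


def warmup_iterations(latencies, tolerance=0.1):
    """Return (first_settled_index, steady_latency).

    Steady state is estimated as the median of the second half of the run.
    The run counts as settled from the earliest index after which every
    latency stays within `tolerance` (relative) of that steady value.
    """
    steady = statistics.median(latencies[len(latencies) // 2:])
    settled = len(latencies)
    # Walk backwards from the end until a latency falls outside the band.
    for i in range(len(latencies) - 1, -1, -1):
        if abs(latencies[i] - steady) <= tolerance * steady:
            settled = i
        else:
            break
    return settled, steady


# Synthetic latencies in ms (illustrative only, not measured data).
samples = [120.0, 80.0, 40.0, 12.0, 10.5, 10.0, 10.2, 9.9, 10.1, 10.0]
idx, steady = warmup_iterations(samples)
print(idx, steady)  # prints "4 10.0"
```

On a device with an NPU you would expect `idx` to be small; on CPU/GPU-only devices the same measurement should show a longer ramp.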

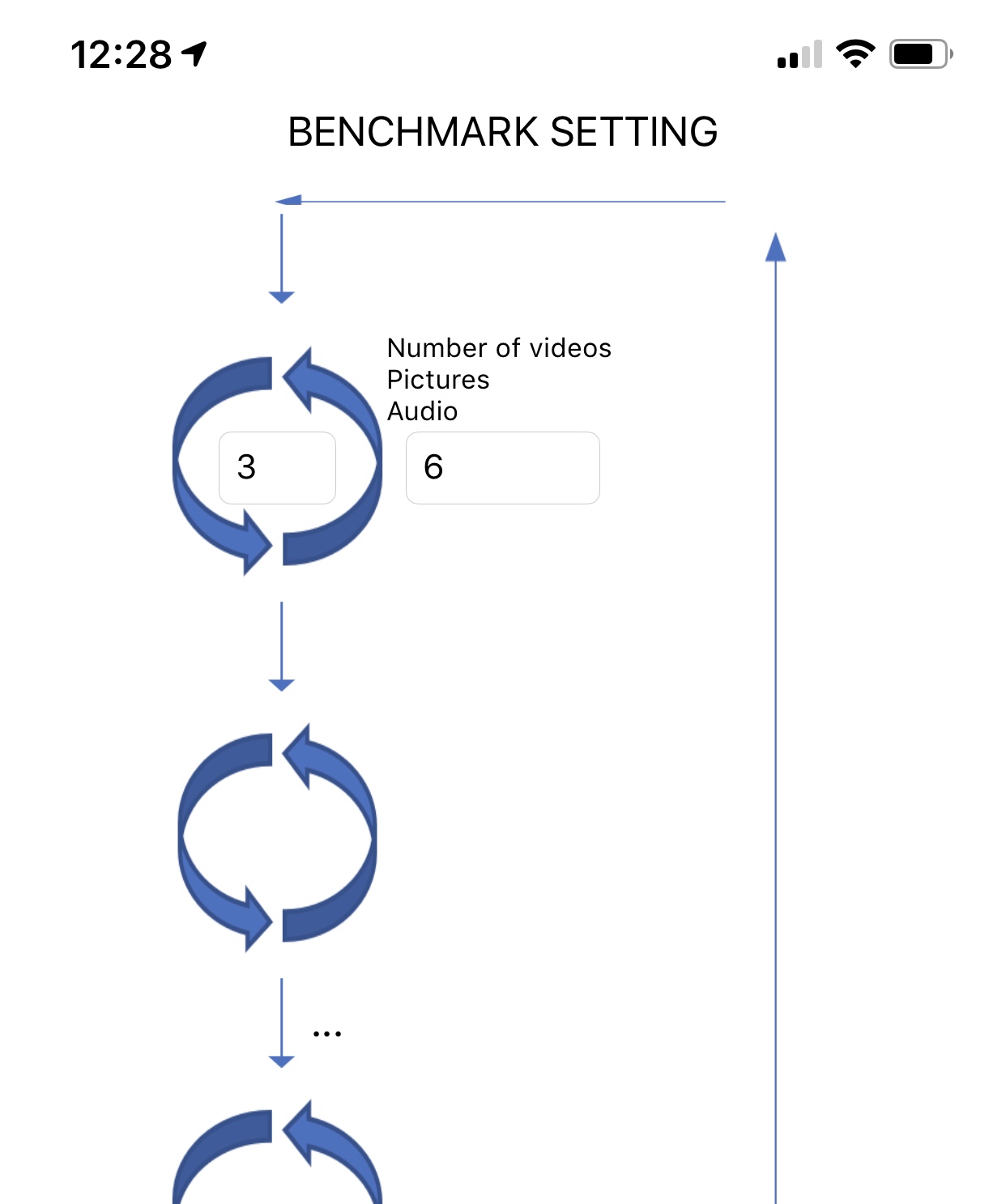

For this reason, I'll add an option to the benchmark to set how many times it loops on the same image/audio clip/video, and how many images/audio clips/videos make up one run. This will let programmers using this data know how long to expect before the subsystem is up to speed, and plan the UI around it.
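The two knobs described above can be sketched as a small configuration object plus a timing loop. This is a hypothetical illustration, not the benchmark's actual code: `BenchmarkConfig`, `run_benchmark`, and the `infer` callable are made-up names, and `infer` stands in for whatever runs the model (e.g. a Core ML prediction) on one input.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence


@dataclass
class BenchmarkConfig:
    loops_per_item: int = 1  # how many times the same image/audio/video is fed in a row
    items_per_run: int = 1   # how many distinct inputs make up one run


def run_benchmark(infer: Callable[[Any], Any],
                  inputs: Sequence[Any],
                  config: BenchmarkConfig) -> List[float]:
    """Return one latency (in seconds) per inference call, in execution order."""
    if len(inputs) < config.items_per_run:
        raise ValueError("not enough inputs for items_per_run")
    latencies = []
    for item in inputs[:config.items_per_run]:
        for _ in range(config.loops_per_item):
            start = time.perf_counter()
            infer(item)
            latencies.append(time.perf_counter() - start)
    return latencies


# Usage with a dummy "model" that just records what it was given.
calls = []
lat = run_benchmark(calls.append, ["a", "b", "c"],
                    BenchmarkConfig(loops_per_item=4, items_per_run=2))
print(len(lat), calls)
```

Keeping latencies per call (rather than one total) is what makes it possible to see how many iterations pass before the accelerator is fully up.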